We are curating a list of this year’s publications, including links to social media, lab websites, and supplemental material. Currently, we have 70 full papers, 26 LBWs, three journal papers, one alt.chi paper, two SIGs, two Case Studies, two Interactivities, and one Student Game Competition entry, and we lead three workshops. One paper received a best paper award and 14 papers received an honorable mention.

Disclaimer: This list is not yet complete, and some DOIs might not resolve yet.

Is your 2025 publication missing? Please enter the details in this Google Form and send us an email to let us know that you added a publication: contact@germanhci.de

OpenEarable 2.0: An AI-Powered Ear Sensing Platform

Tobias Röddiger (TECO / Pervasive Computing Systems, Karlsruhe Institute of Technology (KIT), Karlsruhe, Germany roeddiger@teco.edu), Valeria Zitz (TECO / Pervasive Computing Systems, Karlsruhe Institute of Technology (KIT), Karlsruhe, Germany valeria.zitz@kit.edu), Jonas Hummel (Karlsruhe Institute of Technology (KIT), TECO / Pervasive Computing Systems, Karlsruhe, Germany jonas.hummel@kit.edu), Michael Küttner (TECO / Pervasive Computing Systems, Karlsruhe Institute of Technology (KIT), Karlsruhe, Germany michael.kuettner@kit.edu), Philipp Lepold (TECO / Pervasive Computing Systems, Karlsruhe Institute of Technology (KIT), Karlsruhe, Baden-Wuerttemberg, Germany philipp.lepold@kit.edu), Tobias King (TECO / Pervasive Computing Systems, Karlsruhe Institute of Technology (KIT), Karlsruhe, Germany king@teco.edu), Joseph A Paradiso (MIT Media Lab, Massachusetts Institute of Technology, Cambridge, Massachusetts, USA paradiso@mit.edu), Christopher Clarke (University of Bath, Bath, United Kingdom cjc234@bath.ac.uk), Michael Beigl (TECO / Pervasive Computing Systems, Karlsruhe Institute of Technology (KIT), Karlsruhe, Germany michael.beigl@kit.edu)


@inproceedings{Roeddiger2025Openearable20,

title = {OpenEarable 2.0: An AI-Powered Ear Sensing Platform},

author = {Tobias Röddiger (TECO / Pervasive Computing Systems, Karlsruhe Institute of Technology (KIT), Karlsruhe, Germany roeddiger@teco.edu), Valeria Zitz (TECO / Pervasive Computing Systems, Karlsruhe Institute of Technology (KIT), Karlsruhe, Germany valeria.zitz@kit.edu), Jonas Hummel (Karlsruhe Institute of Technology (KIT), TECO / Pervasive Computing Systems, Karlsruhe, Germany jonas.hummel@kit.edu), Michael Küttner (TECO / Pervasive Computing Systems, Karlsruhe Institute of Technology (KIT), Karlsruhe, Germany michael.kuettner@kit.edu), Philipp Lepold (TECO / Pervasive Computing Systems, Karlsruhe Institute of Technology (KIT), Karlsruhe, Baden-Wuerttemberg, Germany philipp.lepold@kit.edu), Tobias King (TECO / Pervasive Computing Systems, Karlsruhe Institute of Technology (KIT), Karlsruhe, Germany king@teco.edu), Joseph A Paradiso (MIT Media Lab, Massachusetts Institute of Technology, Cambridge, Massachusetts, USA paradiso@mit.edu), Christopher Clarke (University of Bath, Bath, United Kingdom cjc234@bath.ac.uk), Michael Beigl (TECO / Pervasive Computing Systems, Karlsruhe Institute of Technology (KIT), Karlsruhe, Germany michael.beigl@kit.edu)},

url = {https://www.teco.edu/, website

https://www.linkedin.com/in/michael-beigl/, lab's linkedin

https://www.linkedin.com/in/tobiasroeddiger/, author's linkedin

https://open-earable.teco.edu/, social media},

doi = {10.1145/3706599.3721161},

year = {2025},

date = {2025-04-25},

urldate = {2025-04-25},

abstract = {In this demo, we present OpenEarable 2.0, an open-source earphone platform designed to provide an interactive exploration of physiological ear sensing and the development of AI applications. Attendees will have the opportunity to explore real-time sensor data and understand the capabilities of OpenEarable 2.0’s sensing components. OpenEarable 2.0 integrates a rich set of sensors, including two ultrasound-capable microphones (inward/outward), a 3-axis ear canal accelerometer/bone conduction microphone, a 9-axis head inertial measurement unit, a pulse oximeter, an optical temperature sensor, an ear canal pressure sensor, a microSD slot, and a microcontroller. Participants will be able to try out the web-based dashboard and mobile app for real-time control and data visualization. Furthermore, the demo will show different applications and real-time data based on OpenEarable 2.0 across physiological sensing and health monitoring, movement and activity tracking, and human-computer interaction.},

keywords = {Interactivity},

pubstate = {published},

tppubtype = {inproceedings}

}

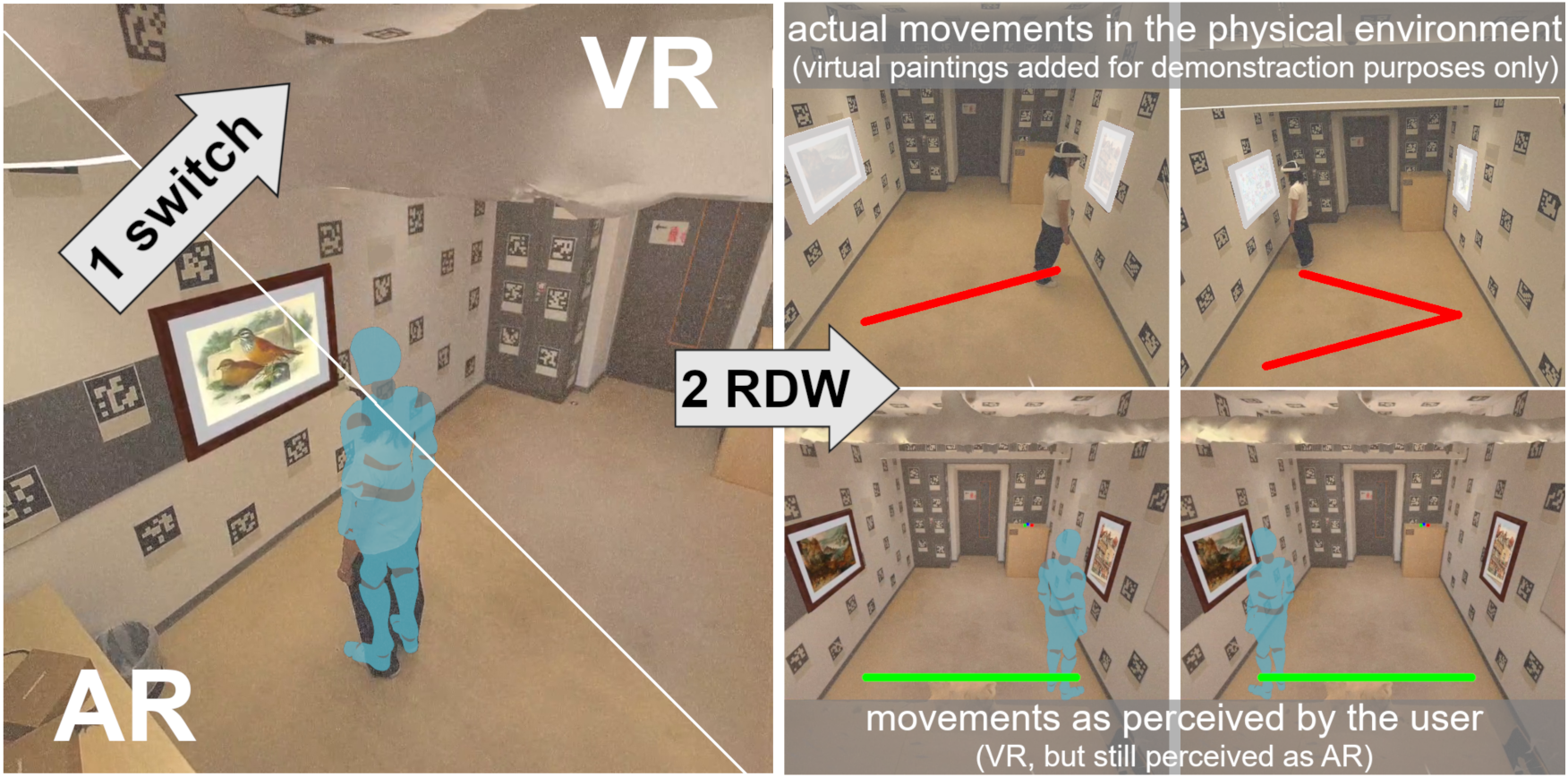

SwitchAR: Enabling Perceptual Manipulations in Augmented Reality Leveraging Change Blindness and Inattentional Blindness

Jonas Wombacher (TU Darmstadt, Darmstadt, Germany), Zhipeng Li (Department of Computer Science, ETH Zurich, Zurich, Switzerland), Jan Gugenheimer (TU Darmstadt, Darmstadt, Germany; Institut Polytechnique de Paris, Télécom Paris - LTCI, Paris, France)


@inproceedings{Wombacher2025Switchar,

title = {SwitchAR: Enabling Perceptual Manipulations in Augmented Reality Leveraging Change Blindness and Inattentional Blindness},

author = {Jonas Wombacher (TU Darmstadt, Darmstadt, Germany), Zhipeng Li (Department of Computer Science, ETH Zurich, Zurich, Switzerland), Jan Gugenheimer (TU Darmstadt, Darmstadt, Germany; Institut Polytechnique de Paris, Télécom Paris - LTCI, Paris, France)},

url = {https://www.teamdarmstadt.de/, website

https://youtu.be/s83PNZHe9jY, teaser video},

doi = {10.1145/3706599.3721159},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {Perceptual manipulations (PMs) like redirected walking (RDW) are frequently applied in Virtual Reality (VR) to overcome technological limitations. These PMs manipulate the user’s visual perceptions (e.g. rotational gains), which is currently challenging in Augmented Reality (AR). We propose SwitchAR, a PM for video pass-through AR leveraging change and inattentional blindness to imperceptibly switch between the camera stream of the real environment and a 3D reconstruction. This enables VR redirection techniques in what users still perceive as AR. We present our pipeline consisting of (1) Reconstruction, (2) Switch (AR -> VR), (3) PM and (4) Switch (VR -> AR), together with a prototype implementing this pipeline. SwitchAR is a fundamental basis enabling AR PMs.},

keywords = {Interactivity},

pubstate = {published},

tppubtype = {inproceedings}

}